System Requirements

Recommended Specifications

When configuring your system, physical or virtual, please make sure that it meets the recommended system requirements:

- 1-2 CPUs - LiquidFiles is not a CPU-intensive application.

- 2 GB of RAM - You can use as little as 1 GB of RAM for test installations and the system will work fine, but it will swap a bit and the interface might be somewhat sluggish.

- 10 GB of disk plus as much space as your data needs - When designing the system, this is where things make a difference. For optimum performance, please use high-speed disks. If performance is critical, avoid shared disks and RAID 5 where you can.
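As a planning aid, the recommendations above can be expressed as a small pre-deployment check. This is a minimal Python sketch, not a LiquidFiles tool: the `PlannedVM` class, the `check_sizing` function and the constant names are hypothetical, and the thresholds are simply the figures from the list above (2 GB of RAM recommended, 1 GB acceptable for testing, 10 GB of disk plus your data).

```python
from dataclasses import dataclass

# Thresholds copied from the recommended specifications above.
# The names are hypothetical; LiquidFiles does not ship this check.
MIN_CPUS = 1
RECOMMENDED_RAM_GB = 2
TEST_RAM_GB = 1        # enough for a test installation, with some swapping
BASE_DISK_GB = 10      # system disk, before any file data


@dataclass
class PlannedVM:
    """Hypothetical description of a VM you are about to create."""
    cpus: int
    ram_gb: float
    disk_gb: float
    expected_data_gb: float  # how much file data you expect to keep


def check_sizing(vm: PlannedVM) -> list[str]:
    """Return a list of warnings; an empty list means the plan meets the recommendations."""
    warnings = []
    if vm.cpus < MIN_CPUS:
        warnings.append("at least 1 CPU is needed")
    if vm.ram_gb < TEST_RAM_GB:
        warnings.append("less than 1 GB of RAM is below even the test-installation size")
    elif vm.ram_gb < RECOMMENDED_RAM_GB:
        warnings.append("less than 2 GB of RAM is fine for testing, but expect some swapping")
    required_disk_gb = BASE_DISK_GB + vm.expected_data_gb
    if vm.disk_gb < required_disk_gb:
        warnings.append(f"disk should be at least {required_disk_gb:g} GB (10 GB + data)")
    return warnings


if __name__ == "__main__":
    print(check_sizing(PlannedVM(cpus=2, ram_gb=2, disk_gb=500, expected_data_gb=400)))
```

With 2 CPUs, 2 GB of RAM and a 500 GB disk for an expected 400 GB of data, the check returns an empty list. It only covers sizing; disk speed, which matters most, is covered under Optimizing Performance.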

Additional Considerations in Virtual Environments

When configuring your Virtual Environment, please consider the following:

- In your hypervisor - Select Linux, Ubuntu, 64-bit as the guest operating system type when installing LiquidFiles. Please note that this is the hypervisor setting that tells it which operating system the guest will be running. It is not an instruction to pre-install Ubuntu (or any other OS). You have to use the LiquidFiles ISO, VMware or Hyper-V packages when installing LiquidFiles, and they already include the OS. It is not possible to install LiquidFiles on a system with an OS already installed.

- Make sure that any "helpful" "easy install" options are disabled in your hypervisor. At least some versions of VMware and Xen have this option, and when it is selected they install what they think is needed for a default Ubuntu installation. But LiquidFiles is not a default Ubuntu installation, so this will leave many critical components uninstalled.

- In Xen, please select "Other install media" instead of "Ubuntu" and the system will install correctly.

- On Hyper-V hosts, you have to configure a Generation 1 VM with the standard Network Adapter (the default).

Supported Hardware

Please see the list of supported hardware for Ubuntu 22.04 LTS at https://ubuntu.com/certified. Any listed/certified hardware is supported for LiquidFiles as well.

Please note that it's not possible to install LiquidFiles on a system that already has an operating system installed. LiquidFiles comes with its operating system included, and that operating system is Ubuntu 22.04 LTS.

Optimizing Performance

When looking at performance optimizations, it is important to understand how the system works and what we're trying to achieve. First, performance optimization is, in reality, almost always about removing bottlenecks or, more precisely, actively choosing what is going to be the limiting performance metric.

We get many support requests asking things like how much performance would improve if we increased the memory from 2 GB to 4 GB, and the answer is that in almost all installations there would be no performance improvement whatsoever.

The reason is simply that LiquidFiles is different from most other products. Take a CRM system, for instance, a very typical intranet application: all the data is stored in a backend database, and all day long users access the data, run searches against the database, look at different views of it, and so on. Adding memory lets the system keep more of the data cached in RAM for faster access. And since searching the data is often a CPU-intensive process, adding CPU and memory to a system like this will almost certainly result in a significant performance improvement.

Now look at the LiquidFiles use case. Users use LiquidFiles to send files that are many gigabytes in size. These files can easily be 10 GB or larger, and we don't even attempt to cache them in RAM. During the actual data transfers, the connection goes from the user's browser (or one of the available plugins) directly to the high-performance web server in large sequential blocks. Neither the web application nor the database is involved in the actual data transfer, and handling these large sequential file blocks takes hardly any CPU. This is how most users spend most of their time interacting with LiquidFiles. Adding more CPUs or RAM won't make data transfers any faster, because that's not where the limiting factor is.

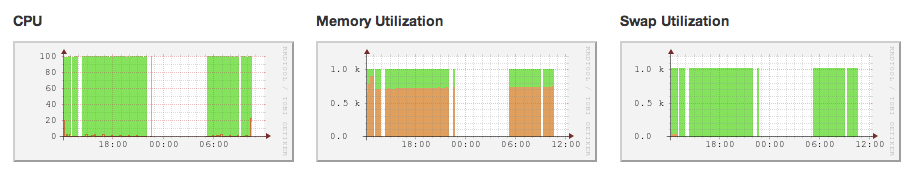

The only time where adding more RAM is going to make the system faster is if the system is swapping heavily. If you go to Admin → Dashboard, you will find graphs similar to this:

This particular system has 1 GB of RAM allocated to it. As you can see, memory usage is about 600-700 MB with 300-400 MB available. The graph on the right shows that no swapping is going on. If we increased the memory in this system to 2 GB, the only effect would be that available memory would increase from 300-400 MB to 1300-1400 MB, and having more available memory simply wouldn't improve performance at all.

The CPU utilization graph on the left tells the same story: this system hardly uses any CPU at all.

To return to the idea of actively choosing the limiting performance metric: in LiquidFiles, for the highest performance, we want the limiting factor to be either disk speed or network speed. This happens once the CPU load is at a reasonable level and the system is not swapping, or swapping very little (swap usage below about 10% of the available RAM does not really affect performance).

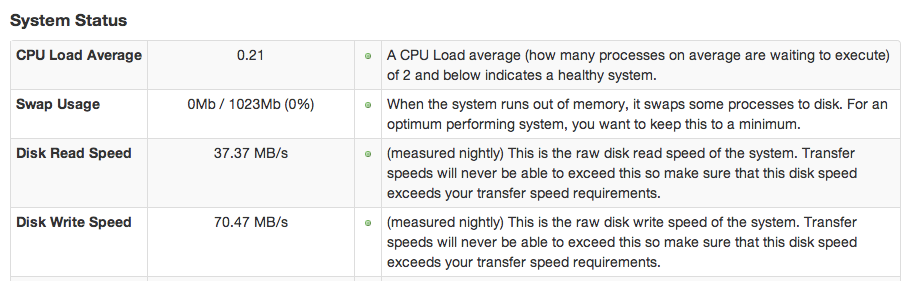

If you go to Admin → Status, you will see something like this:

First, you will see yet another view of the CPU and memory status. If the CPU load in Admin → Status is below 2 and the system isn't swapping, adding more CPUs or memory won't improve performance significantly.
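The two rules of thumb on this page, a CPU load below 2 in Admin → Status and swap usage below roughly 10% of RAM, can be combined into a quick check of whether adding CPUs or RAM is worth trying. This is a hedged Python sketch: the function name and parameters are hypothetical, you would read the input figures off the Admin → Dashboard and Admin → Status pages yourself, and LiquidFiles does not expose such a function.

```python
def cpu_or_ram_is_limiting(cpu_load: float, ram_mb: float, swap_used_mb: float) -> bool:
    """Return True if adding CPUs or RAM is likely to improve performance.

    Rules of thumb (taken from the guidance on this page):
    - a CPU load below 2 is a reasonable level;
    - swap usage below about 10% of RAM does not really affect performance.
    """
    cpu_ok = cpu_load < 2
    swap_ok = swap_used_mb < 0.10 * ram_mb
    # CPU or RAM is only the bottleneck if one of the two is out of range.
    return not (cpu_ok and swap_ok)


if __name__ == "__main__":
    # Figures resembling the 1 GB Dashboard example: no swap in use.
    # The CPU load of 0.2 is an assumed value for illustration.
    print(cpu_or_ram_is_limiting(cpu_load=0.2, ram_mb=1024, swap_used_mb=0))
```

For a system like the Dashboard example the check returns `False`, so the place to look for improvements is disk and network speed rather than CPU or memory.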

The next two rows show the disk read and write speeds. If this system is connected to a gigabit network, which can carry at most about 125 MB/s (1 Gbit/s divided by 8 bits per byte), the disk speeds, in this case 37 and 70 MB/s, would be the limit on how fast transfers to and from this LiquidFiles system can be. The disk speed is measured nightly, so the figures could well be skewed if the disk is shared with other systems that run backups at the same time but have less load during normal working hours.

To improve the performance of this system, we would have to improve disk performance to get the read and write speeds above about 125 MB/s, at which point the gigabit network would be the limiting factor (assuming the full 1 Gbps is available to LiquidFiles). If this LiquidFiles system were installed on a physical system, we would simply have to purchase faster disks.
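The bottleneck reasoning above can be sketched as a small calculation: each transfer direction runs at the slower of the disk speed and the network speed. This is a minimal Python sketch under stated assumptions: the function names are hypothetical, 1 Gbit/s is converted to 125 MB/s using decimal units with no protocol overhead (real-world throughput will be somewhat lower), downloads are assumed to be bounded by disk reads and uploads by disk writes, and the 37 and 70 MB/s inputs are the read and write figures from the Admin → Status example.

```python
def gbit_to_mb_per_s(gbit_per_s: float) -> float:
    """Convert a link speed in Gbit/s to MB/s (decimal units, no protocol overhead)."""
    return gbit_per_s * 1000 / 8


def transfer_limit(disk_read_mb_s: float, disk_write_mb_s: float,
                   network_gbit_s: float) -> tuple[float, float, str]:
    """Return (download MB/s, upload MB/s, limiting component).

    Downloads are assumed to be bounded by disk reads and uploads by disk
    writes; both are also capped by the network link.
    """
    net_mb_s = gbit_to_mb_per_s(network_gbit_s)
    download = min(disk_read_mb_s, net_mb_s)
    upload = min(disk_write_mb_s, net_mb_s)
    disk_keeps_up = disk_read_mb_s >= net_mb_s and disk_write_mb_s >= net_mb_s
    return download, upload, "network" if disk_keeps_up else "disk"


def transfer_seconds(size_gb: float, rate_mb_s: float) -> float:
    """Time to move size_gb gigabytes at rate_mb_s megabytes per second."""
    return size_gb * 1000 / rate_mb_s


if __name__ == "__main__":
    down, up, limiter = transfer_limit(disk_read_mb_s=37, disk_write_mb_s=70, network_gbit_s=1)
    print(f"download {down} MB/s, upload {up} MB/s, limited by {limiter}")
    print(f"10 GB download: {transfer_seconds(10, down):.0f} s")
```

With these figures the disk is the limit in both directions, and a 10 GB download would take roughly 270 seconds. Only once both disk speeds reach about 125 MB/s does the sketch report the network as the limit, which is the state we want LiquidFiles to be in.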

If, like most organisations, we're running LiquidFiles in a shared virtual environment, we have more options available. As already discussed, most systems are limited by RAM and CPU speed, so disks are often not optimized for speed. Most organisations use some form of RAID configuration, often RAID 5 or similar, with disk arrays that give a high level of redundancy and the maximum amount of usable storage, at the cost of performance.

When designing a disk system for performance, the best option for most organisations is to split the disks into space-optimized and performance-optimized areas, or disks. The performance-optimized disks are either striped or mirrored, which gives a much higher level of performance at the cost of storage efficiency. These performance-optimized disks would then be used for systems like LiquidFiles and as temporary storage volumes for other systems that require high-performance disks.
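To make the space-versus-performance trade-off concrete, here is a rough comparison of striped (RAID 0), striped-and-mirrored (RAID 10) and RAID 5 arrays built from the same disks. This is a simplified Python sketch, not a sizing tool: it uses the common rule-of-thumb write penalty for small random writes (1 back-end I/O per write for RAID 0, 2 for RAID 10, 4 for RAID 5), it ignores controller caching and full-stripe sequential writes, and the 8 disks of 4 TB at 150 IOPS each are assumed example figures. Note that RAID 0 has no redundancy at all.

```python
from dataclasses import dataclass

# Rule-of-thumb write penalty: back-end disk I/Os per front-end random write.
WRITE_PENALTY = {"RAID 0": 1, "RAID 10": 2, "RAID 5": 4}


@dataclass
class ArrayEstimate:
    level: str
    usable_tb: float
    write_iops: float


def estimate(level: str, disks: int, disk_tb: float, disk_iops: float) -> ArrayEstimate:
    """Estimate usable capacity and random-write IOPS for a simple array."""
    if level == "RAID 0":
        usable = disks * disk_tb            # striping: all capacity, no redundancy
    elif level == "RAID 10":
        if disks < 4 or disks % 2:
            raise ValueError("RAID 10 needs an even number of disks, at least 4")
        usable = disks // 2 * disk_tb       # every block is stored twice
    elif level == "RAID 5":
        if disks < 3:
            raise ValueError("RAID 5 needs at least 3 disks")
        usable = (disks - 1) * disk_tb      # one disk's worth of parity
    else:
        raise ValueError(f"unsupported level: {level}")
    write_iops = disks * disk_iops / WRITE_PENALTY[level]
    return ArrayEstimate(level, usable, write_iops)


if __name__ == "__main__":
    for level in WRITE_PENALTY:
        e = estimate(level, disks=8, disk_tb=4, disk_iops=150)
        print(f"{e.level:8} {e.usable_tb:5.0f} TB {e.write_iops:6.0f} write IOPS")
```

With these assumed figures, RAID 10 gives up 12 TB of usable capacity compared with RAID 5 (16 TB versus 28 TB) in exchange for roughly twice the random-write IOPS, which is the kind of trade-off a performance-optimized area makes.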

To continue, please select an installation option: